
AI in Content Moderation: Balancing Freedom and Safety Online

Introduction

The internet is both a challenge and an opportunity. Online spaces now carry great weight in people's lives, so the need for an open and inclusive internet has arguably never been greater. Content moderation is at the forefront of protecting those spaces. It is a complex, constantly changing process that depends on human judgment backed by capable technology. AI content moderation adds artificial intelligence and machine learning to that mix, helping moderators keep pace with the growing volume and complexity of online content.

At DM WebSoft LLP, we use AI to build solutions that strike the right balance between freedom and safety, so that the digital world can be a place of productive, meaningful interaction. Our investment in AI for content moderation reflects our commitment to a safer, more open internet.

The Challenges of Online Content Moderation

In the vast universe of the internet, content moderation is an essential but daunting task: it is needed to protect the digital ecosystem, yet it is full of challenges. Because online platforms are open forums for creativity, expression, and connection, they are also highly exposed to large volumes of harmful content. Effective moderation strategies have to resolve this tension, keeping users safe without stifling the lively exchange of ideas.

Volume and Velocity

Content flows continuously, with new material arriving every second. Social media, forums, and websites are flooded with text, images, videos, and comments, all waiting to be reviewed.

This deluge is not constant. It ebbs and flows, and trending events or viral content can swell it into an overwhelming wave of data that tests the scale and responsiveness of moderation systems.

Context and Cultural Nuances

Content does not exist in a vacuum; its meaning comes from its surroundings. The same content can be read very differently depending on cultural, regional, and personal context: what one culture finds offensive, another may regard as perfectly normal. Moderation therefore requires nuance, whether from sophisticated AI systems that grasp subtleties or from human moderators who can interpret context.

Evolving Standards and Regulations

The digital landscape is not static; evolving social norms and legal frameworks constantly redefine it. A post that is acceptable today may become unacceptable tomorrow, so content moderation policies must adapt continuously.

This adds another layer of complexity to building moderation algorithms and guidelines that stay current and effective.

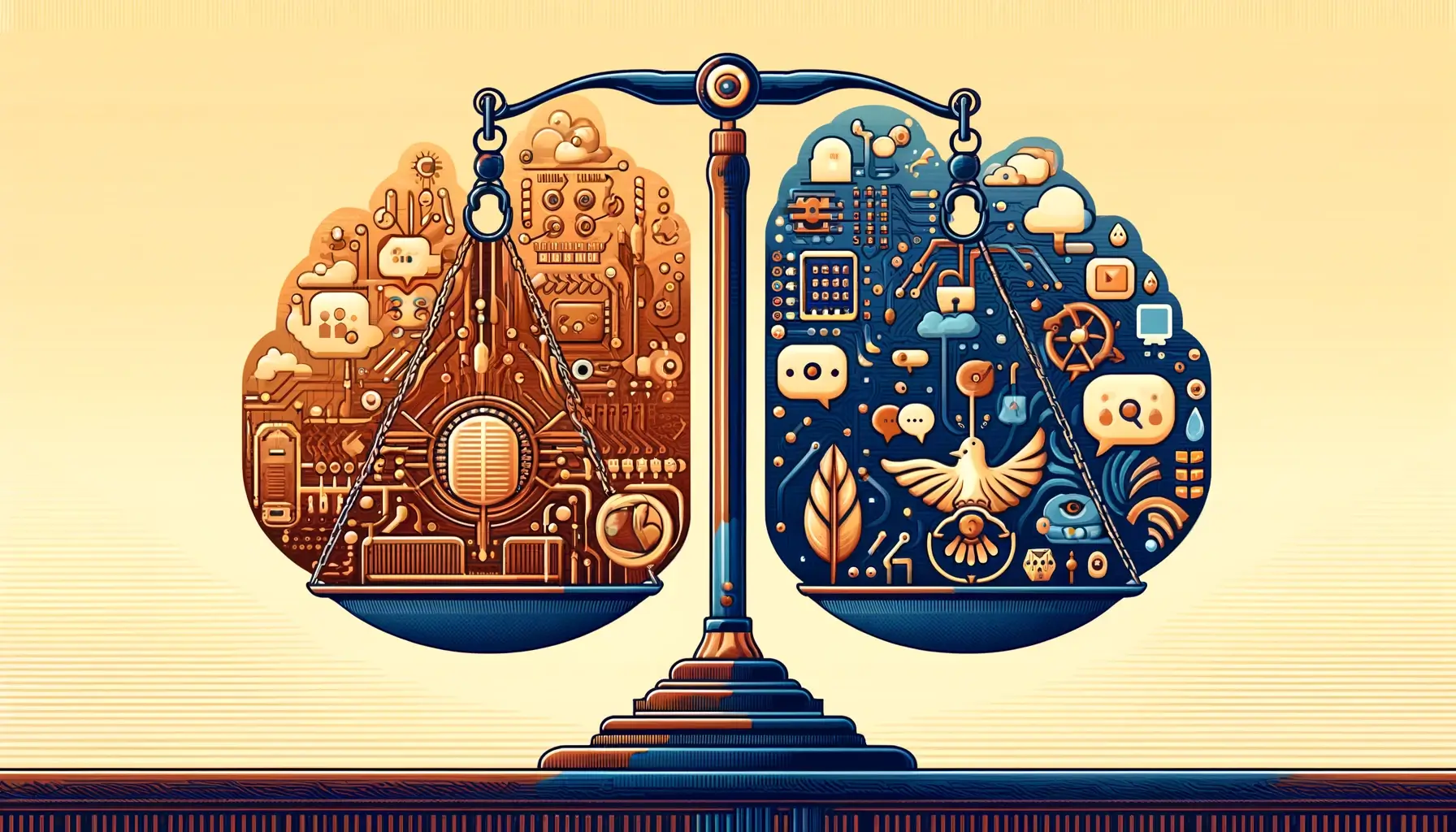

Balancing Act: Freedom vs. Safety

The central tension in content moderation is the fine line between protecting online communities and guaranteeing freedom of expression. On one hand, over-moderation can place unwarranted burdens on discourse and innovation. On the other, under-moderation risks letting harmful content run wild and endanger users.

Achieving this balance is perhaps the hardest task of all: it means respecting diversity of opinion while still ensuring user safety.
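One way to make the over-moderation versus under-moderation trade-off concrete is to treat it as the choice of a removal threshold on a classifier's harm score. The sketch below assumes a hypothetical model that outputs a score between 0 and 1, plus a small set of human-labelled examples. It picks the threshold that minimizes a weighted cost, where `fp_cost` penalizes removing acceptable posts and `fn_cost` penalizes leaving harmful ones up. All names, weights, and data are illustrative assumptions, not a description of any particular production system.

```python
from typing import List, Tuple

Sample = Tuple[float, bool]  # (harm_score, is_actually_harmful), e.g. from human review


def moderation_cost(threshold: float, samples: List[Sample],
                    fp_cost: float, fn_cost: float) -> float:
    """Weighted cost of removing every post whose score is >= threshold."""
    cost = 0.0
    for score, harmful in samples:
        removed = score >= threshold
        if removed and not harmful:
            cost += fp_cost  # over-moderation: acceptable post removed
        elif not removed and harmful:
            cost += fn_cost  # under-moderation: harmful post left up
    return cost


def best_threshold(samples: List[Sample], fp_cost: float, fn_cost: float) -> float:
    """Return the candidate threshold with the lowest weighted cost."""
    # Every observed score is a candidate; 1.01 means "remove nothing".
    # Ties go to the lowest threshold because candidates are sorted ascending.
    candidates = sorted({score for score, _ in samples}) + [1.01]
    return min(candidates,
               key=lambda t: moderation_cost(t, samples, fp_cost, fn_cost))


if __name__ == "__main__":
    # Six made-up labelled examples, for illustration only.
    samples = [(0.95, True), (0.80, True), (0.70, False),
               (0.40, True), (0.20, False), (0.05, False)]
    print(best_threshold(samples, fp_cost=5, fn_cost=1))  # 0.8
    print(best_threshold(samples, fp_cost=1, fn_cost=5))  # 0.4
```

Weighting wrongful removals more heavily raises the bar for removal (0.8), while weighting missed harm more heavily lowers it (0.4). The weights themselves are a policy decision, not something the algorithm can settle.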

The Role of AI in Addressing These Challenges

Against this backdrop, AI content moderation holds the promise of scalable, efficient, and increasingly sophisticated solutions. As AI algorithms learn from more data, they adapt, understand context better, and recognize harmful patterns, sometimes flagging a problem before it escalates. But AI is not a panacea for content moderation. It is one part of a larger whole, and it can multiply the effectiveness of moderation efforts when deployed thoughtfully with human oversight.

At DM WebSoft LLP, we recognize these challenges and are keen to push the limits of AI in content moderation. Our solutions are designed not only to respond to today's landscape but to anticipate and evolve with the changing dynamics of the online world, so that safety and freedom stay in harmony in the digital spaces we help nurture.

Artificial Intelligence in Content Moderation

In the demanding work of online content moderation, artificial intelligence has proven an able ally, cutting through the digital landscape with unprecedented speed and perceptiveness. This section looks at how AI is transforming content moderation and ushering in a new era of precision and effectiveness.

The AI Advantage in Content Moderation

At the heart of AI's impact on content moderation is its ability to sift through huge amounts of data at speeds no human team can match. Traditional moderation relies heavily on human staff and manual review; AI algorithms, by contrast, can monitor, analyze, and make decisions at a pace that keeps up with the constant, growing flow of new online content.

This capability is crucial in a now-or-never environment, where the window for preventing harm is very small.
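When the window for preventing harm is small, the order in which flagged items are handled matters as much as raw speed. Below is a minimal sketch of a review queue that surfaces the highest-risk items first. The priority formula (estimated harm score multiplied by expected views) and the `ReviewQueue` class are hypothetical choices for illustration; a real platform would define risk according to its own policies.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class ReviewQueue:
    """Queue of flagged content that pops the highest-risk item first (hypothetical)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._counter = itertools.count()  # tie-breaker: earlier items first

    def push(self, content_id: str, harm_score: float, expected_views: int) -> None:
        # Assumed priority: estimated harm x potential reach.
        # heapq is a min-heap, so the priority is negated to pop the largest first.
        priority = harm_score * expected_views
        heapq.heappush(self._heap, (-priority, next(self._counter), content_id))

    def pop(self) -> Optional[str]:
        """Remove and return the highest-priority content ID, or None if empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    q = ReviewQueue()
    q.push("post-a", harm_score=0.9, expected_views=10)    # priority 9
    q.push("post-b", harm_score=0.3, expected_views=1000)  # priority 300
    q.push("post-c", harm_score=0.6, expected_views=100)   # priority 60
    print([q.pop() for _ in range(len(q))])  # ['post-b', 'post-c', 'post-a']
```

Weighting by reach means a moderately risky post that is going viral can be reviewed before a more clearly harmful post few people will see; whether that is the right trade-off is, again, a policy question.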

Understanding Context and Nuance

Recent developments also show AI content moderation getting better at understanding not just context but nuance. Models fine-tuned on many datasets have become adept at picking up subtle linguistic cues, sentiment, and cultural differences. This progress addresses one of the most sensitive issues in content moderation, the variability of context, and allows AI systems to make better-informed, more nuanced decisions.

Learning and Adapting

Unlike static moderation rules, AI can learn and improve over time. By analyzing patterns in feedback and in the outcomes of past decisions, AI models can iteratively refine their algorithms for greater precision and effectiveness.

This dynamic learning keeps AI moderation systems responsive to new challenges, evolving threats, and even shifting social norms.
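To make "learning from feedback" concrete, here is a minimal sketch of an online classifier that updates after each human moderation decision. It uses logistic regression trained by stochastic gradient descent on bag-of-words features, a deliberately simple stand-in chosen for illustration; real moderation models are far richer. The `FeedbackModel` class, its learning rate, and the toy examples are all hypothetical.

```python
import math
from typing import Dict, Set


class FeedbackModel:
    """Tiny online logistic-regression classifier over word tokens (hypothetical).

    Each human moderation decision is fed back as a labelled example, and the
    weights are nudged by one step of stochastic gradient descent.
    """

    def __init__(self, learning_rate: float = 0.5) -> None:
        self.lr = learning_rate
        self.bias = 0.0
        self.weights: Dict[str, float] = {}  # token -> weight

    @staticmethod
    def _tokens(text: str) -> Set[str]:
        return set(text.lower().split())

    def score(self, text: str) -> float:
        """Estimated probability that the text is harmful."""
        z = self.bias + sum(self.weights.get(t, 0.0) for t in self._tokens(text))
        return 1.0 / (1.0 + math.exp(-z))

    def learn(self, text: str, harmful: bool) -> None:
        """Update the weights from one human-reviewed example."""
        error = (1.0 if harmful else 0.0) - self.score(text)
        self.bias += self.lr * error
        for t in self._tokens(text):
            self.weights[t] = self.weights.get(t, 0.0) + self.lr * error


if __name__ == "__main__":
    # Made-up moderator decisions: (text, was it judged harmful?)
    feedback = [("you are an idiot", True), ("have a great day", False),
                ("idiot go away", True), ("great game today", False)]
    model = FeedbackModel()
    for _ in range(20):  # replay the feedback a few times
        for text, harmful in feedback:
            model.learn(text, harmful)
    print(round(model.score("what an idiot"), 3))  # well above 0.5
    print(round(model.score("great day"), 3))      # well below 0.5
```

Because every update comes from a human decision, the quality of what the model learns depends directly on the quality and consistency of that human review.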

Combining AI and Human Expertise

Despite all of AI's advances, human judgment remains the bedrock of good content moderation. AI does well at filtering routine, high-volume content, while human moderators bring invaluable insight to intricate and sensitive cases.

Designed for collaboration, this hybrid model combines the best of both, making moderation strategies scalable as well as empathetic.
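A common way to implement this division of labour is confidence-based routing: the AI acts on its own only when its score is clearly low or clearly high, and everything in between goes to a human. The sketch below assumes a hypothetical classifier that returns a harm score from 0 to 1; the class names and the 0.2 and 0.9 thresholds are illustrative placeholders, not recommended values.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class RoutingPolicy:
    # Hypothetical thresholds on a 0-1 harm score from an AI classifier.
    approve_below: float = 0.2
    remove_at_or_above: float = 0.9

    def __post_init__(self) -> None:
        if not 0.0 <= self.approve_below <= self.remove_at_or_above <= 1.0:
            raise ValueError("need 0 <= approve_below <= remove_at_or_above <= 1")

    def route(self, harm_score: float) -> Action:
        if not 0.0 <= harm_score <= 1.0:
            raise ValueError("harm_score must be in [0, 1]")
        if harm_score >= self.remove_at_or_above:
            return Action.REMOVE        # clear-cut, high-confidence harm
        if harm_score < self.approve_below:
            return Action.APPROVE       # clearly benign, routine content
        return Action.HUMAN_REVIEW      # ambiguous: needs human judgment


if __name__ == "__main__":
    policy = RoutingPolicy()
    for score in (0.05, 0.5, 0.97):
        print(score, policy.route(score).value)
    # 0.05 approve
    # 0.5 human_review
    # 0.97 remove
```

Widening the band between the two thresholds sends more content to people, which raises cost but lowers the chance of an automated mistake; narrowing it does the opposite.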

DM WebSoft LLP’s Approach to AI-Powered Content Moderation

At DM WebSoft LLP, we harness AI to build content moderation solutions that are proactive rather than merely reactive. Our AI models aim to stay one step ahead of potential problems in the digital ecosystem and to scale as the online communities they protect grow. By combining sophisticated AI with deep knowledge of the digital ecosystem, we help platforms create spaces where freedom and safety coexist, reaffirming our promise to promote online well-being through technology.

This detailed look at the subtleties of AI in content moderation shows that it is a journey of constant innovation and adaptation. The sections that follow examine AI's effect on online safety and the delicate balance between freedom and moderation that DM WebSoft LLP is working toward for a safer, more inclusive digital future.

The Role of AI in Enhancing Online Safety

Online safety matters more than ever in the digital age, now that online platforms underpin our communication, commerce, and communities. Artificial intelligence (AI) has become prominent in this area because it offers new ways to protect large numbers of users from the serious risks they face. This section explains how AI helps make the internet a more secure and trustworthy environment.

AI as a Sentinel of Online Safety

In content moderation, AI acts as a digital sentinel, constantly watching over users' safety. Modern algorithms and machine learning allow these systems to detect and remove potential threats, such as cyberbullying, hate speech, phishing, and fake profiles, far faster than manual review could.

In today’s fast-moving digital world, which is full of pitfalls, this approach to safety is essential.

Precision in Threat Detection

AI can detect trends and even very small patterns or anomalies in threatening content or in an individual's threatening online behavior, picking up differences that elude traditional rule-based systems. This kind of precision is needed to counter new cyber threats that constantly change to avoid detection.

Adaptability and Scalability

The digital landscape changes daily, with new platforms, technologies, and ways of engaging people. AI's adaptability is therefore an important asset, allowing content moderation systems to evolve alongside the digital ecosystem.

AI's scalability, in turn, helps safety measures remain effective even as the volume of online content keeps growing exponentially.

Enhancing User Trust and Experience

AI also directly improves user trust and experience by helping protect online spaces from harmful content. A safer online space encourages people to participate more openly, which leads to better interactions and stronger communities. When a platform earns that trust, more users are likely to contribute and participate, creating a virtuous cycle of growth and engagement.

DM WebSoft LLP: Pioneering AI-Driven Online Safety

At DM WebSoft LLP, we understand the deeper significance and impact of AI on online safety. Our commitment to state-of-the-art AI technology goes beyond basic content moderation. Our aim is comprehensive solutions that anticipate likely risks, protect user privacy, and preserve the integrity of online environments. Advanced AI-based safety measures let platforms do more than react to hazards: they can create spaces where safety goes hand in hand with freedom. The journey toward a safer internet continues, with AI at the helm.

Recognizing AI's immense potential for digital safety, companies such as DM WebSoft LLP continue to focus on innovation and user safety. The next section explores the delicate balance between an AI-enhanced safety net and the preservation of online freedom.

Balancing Act: AI and Online Freedom

Digital governance requires a delicate balance: AI-driven content moderation must protect users without undermining online free speech. It is like walking a tightrope, carefully weighing the need to protect public spaces from offensive content against the fundamental right to freedom of expression. This section examines the balance needed to foster a digital ecosystem that is both safe and free.

The Essence of Online Freedom

Freedom is central to the founding values of the internet: the freedom to express, inquire, assemble, and innovate. This freedom is not a by-product of the internet's open architecture but an integral part of it; without it, the internet as we know it could not exist.

This freedom drives creativity, sparks dialogue, and fuels social progress. Yet it also opens paths to misuse, which is why some form of governance is needed.

AI’s Role in Content Moderation: A Double-Edged Sword

AI can analyze data quickly and recognize patterns with a high degree of accuracy, which makes it a promising tool for content moderation. But its deployment raises hard questions about overreach, chief among them the suppression of legitimate discussion. The risk of false positives, where AI unintentionally censors harmless speech in the name of moderation, is exactly why transparency and accountability in AI algorithms are crucial.

Striking the Right Balance

Achieving this balance requires a combined approach built on transparency, user empowerment, and continuous oversight. AI moderation processes should be transparent enough that users understand how and why content is moderated. Users should also have more control over filters and over the kinds of content they want to see, giving them greater autonomy over their own online experience. Finally, ongoing oversight that pairs AI with human review makes over-moderation less likely.

The Role of Policy and Ethical Frameworks

Using AI in content moderation therefore requires clearly defined rules grounded in high ethical standards. These frameworks must cover both the technical basis on which AI is used and the ethical considerations involved, including privacy, freedom of speech, and the dangers of AI bias.

What is fundamental, as many in the community have emphasized, is collaboration among tech companies, civil society, and policymakers to craft policies that respect online freedoms while also keeping users safe.

The quest to balance AI-driven content moderation with the preservation of online freedom is ongoing. It requires close cooperation that embraces technological innovation while holding firmly to human rights and ethical principles. Going forward, the collective wisdom of the global community will be vital in navigating this difficult terrain, so that the digital world does not become an arena where either freedom of speech or everyone's safety is repeatedly curtailed.

The Future of Content Moderation Technologies

With a new generation of digital communication on the horizon, the future of content moderation technology looks promising. This final section considers future developments and innovations, envisioning a digital environment where safety, freedom, and technology work together to build online communities that are more tolerant and respectful toward all users.

Integration of Advanced AI and Human Insight

The future points to a closer blend of AI technology and human expertise. AI systems are expected to move beyond pattern recognition to incorporate emotional intelligence and cultural understanding. These advances would give moderation tools a better grasp of the context behind delicate, nuanced human interactions, reducing errors and supporting fair, well-informed moderation decisions.

Personalized Moderation Experiences

New technologies will allow more personalized, finely tuned moderation settings than ever before. In such a user-centric approach, each person could set their own boundaries for acceptable content based on personal sensitivities and cultural context, within an environment built on mutual respect.
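Personal moderation settings can be modelled as per-user limits on per-category scores. The sketch below assumes a hypothetical classifier that scores each post on several categories from 0 to 1; a post is hidden for a given user if any category exceeds that user's limit. The category names, default limits, and function names are illustrative assumptions. Such personal filters would sit on top of, not replace, platform-wide rules for content that is removed for everyone.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical categories and default limits; 1.0 means "never hide for this category".
DEFAULT_LIMITS: Dict[str, float] = {"profanity": 0.8, "violence": 0.6, "spoilers": 1.0}


@dataclass
class UserPreferences:
    # Per-category tolerance chosen by the user; scores above the limit are hidden.
    limits: Dict[str, float] = field(default_factory=lambda: dict(DEFAULT_LIMITS))

    def set_limit(self, category: str, limit: float) -> None:
        if not 0.0 <= limit <= 1.0:
            raise ValueError("limit must be in [0, 1]")
        self.limits[category] = limit


def visible_to(user: UserPreferences, category_scores: Dict[str, float]) -> bool:
    """True if no category score exceeds this user's limit for that category."""
    return all(score <= user.limits.get(category, 1.0)
               for category, score in category_scores.items())


if __name__ == "__main__":
    post = {"profanity": 0.7, "spoilers": 0.9}

    default_user = UserPreferences()
    strict_user = UserPreferences()
    strict_user.set_limit("profanity", 0.3)
    spoiler_averse = UserPreferences()
    spoiler_averse.set_limit("spoilers", 0.5)

    print(visible_to(default_user, post))    # True
    print(visible_to(strict_user, post))     # False
    print(visible_to(spoiler_averse, post))  # False
```

The same post is shown to one user and hidden from two others, without the platform having to make a single global call about it.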

Proactive and Predictive Moderation

As newer machine learning algorithms emerge, content moderation systems will move beyond being purely reactive to become proactive and predictive. They will anticipate issues and intervene before problems escalate, dealing with harmful content early and safeguarding online spaces.

Transparency and Accountability

As AI takes on more of the work of content moderation, transparency and accountability will become imperative. Future technologies are likely to include auditing and oversight mechanisms that let users understand and question the systems' decisions. This would build users' trust in the platform and make moderation processes fairer.
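Auditability starts with recording each decision in enough detail to explain it and, if necessary, challenge it. Below is a minimal sketch of such a record, assuming a hypothetical schema: which published rule was applied, which model version produced the score, the threshold used, who made the final call, and the appeal status. The field names and helper functions are illustrative, not a standard format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModerationRecord:
    """One auditable moderation decision (hypothetical schema)."""
    content_id: str
    action: str                          # e.g. "remove" or "approve"
    policy_rule: str                     # which published rule was applied
    model_version: str                   # which model produced the score
    harm_score: float
    threshold: float
    decided_by: str                      # "model" or a reviewer ID
    timestamp: str                       # ISO 8601, UTC
    appeal_status: Optional[str] = None  # None, "pending", "upheld", "overturned"

    def explanation(self) -> str:
        """Plain-language reason that can be shown to the affected user."""
        return (f"Content {self.content_id} was marked '{self.action}' under rule "
                f"'{self.policy_rule}' (score {self.harm_score:.2f} vs. "
                f"threshold {self.threshold:.2f}, decided by {self.decided_by}).")

    def open_appeal(self) -> None:
        if self.appeal_status is not None:
            raise ValueError("an appeal already exists for this decision")
        self.appeal_status = "pending"


def new_record(content_id: str, action: str, policy_rule: str, model_version: str,
               harm_score: float, threshold: float,
               decided_by: str = "model") -> ModerationRecord:
    return ModerationRecord(content_id, action, policy_rule, model_version,
                            harm_score, threshold, decided_by,
                            datetime.now(timezone.utc).isoformat())


def to_audit_log_line(record: ModerationRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    rec = new_record("post-123", "remove", "harassment", "clf-v2", 0.93, 0.9)
    print(rec.explanation())
    # Content post-123 was marked 'remove' under rule 'harassment' (score 0.93 vs. threshold 0.90, decided by model).
    rec.open_appeal()
    print(to_audit_log_line(rec))
```

Storing the model version and threshold alongside each decision lets auditors reconstruct why a post was removed even after the model or policy has changed.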

Collaborative Global Efforts

Moderating the World Wide Web calls for collaboration. New technologies are expected to enable closer cooperation among platforms, government bodies, and civil society in establishing common standards and sharing best practices. Collective effort is essential for tackling challenges that cut across platforms and for forming a united position on online safety.

The future of content moderation technology holds promise for a safer, more inclusive internet. Guided by these principles, technological progress will reshape how we communicate online and help build environments where everyone is free to express themselves, learn, and connect with respect and understanding. The road ahead calls not only for continual innovation and collaboration but also for devotion to the ideals that make the digital world a cornerstone of modern society.

Conclusion

In conclusion, this look at AI in Content Moderation: Balancing Freedom and Safety Online has highlighted AI's significant role in shaping the digital landscape. We have explored the many facets of content moderation, the vital role AI plays in advancing online safety, the fine balance between community safety and freedom, and the promising frontiers opened up by advancing AI moderation technology.

Artificial intelligence, with its unparalleled ability to analyze large volumes of data, has become an indispensable ally in creating safe online spaces. As the previous sections showed, however, this power must be wielded carefully so that it enables, rather than undermines, the open expression and creativity that the internet makes possible.

The future looks promising. AI trends point toward even more sophisticated, user-centric moderation solutions that will not only tackle today's challenges but also anticipate future needs, keeping the internet a positive, meaningful space for interaction.

This progress rests on a shared commitment to a digital ecosystem that respects diversity and embraces both freedom and safety. This vision will guide our efforts to make the digital world a space of limitless potential and opportunity.

In essence, AI for content moderation is a journey of continuous learning, innovation, and collaboration. It is a road we walk together: policymakers, technologists, users, and everyone else with a stake in a digital future that is safe, free, and open to all.

AI content moderation refers to the use of artificial intelligence technologies, specifically machine learning and natural language processing, to systematically review and manage user-generated content across digital platforms, checking it against platform policies and legal standards to maintain a safe environment.

Balancing safety with freedom of expression requires AI algorithms sophisticated enough to identify harmful content accurately without falling into the trap of over-enforcement, which stifles free speech. This depends heavily on continuous improvement of AI models and on human review of contentious or complex cases.

While AI offers efficiency and scalability, it cannot replace human intuition and understanding, especially for complex, context-sensitive content. The best moderation strategies therefore follow a hybrid approach, using AI's speed and scale while recognizing the irreplaceable value of human judgment and empathy.

The challenges for AI in content moderation include handling context and cultural nuance, keeping algorithms up to date with changing social norms and legal regulations, and ensuring transparency and fairness in moderation decisions, all while putting user privacy first.

AI in content moderation is expected to become far more advanced, offering users more personalized moderation settings, making AI systems more transparent and accountable, and deepening cooperation among platforms and stakeholders in building safe, tolerant online communities.
